About Me

Hi, my name is Gukyeong Kwon. I am a Senior Applied Scientist at Amazon Artificial General Intelligence (AGI). I received my M.S. and Ph.D. degrees from the School of Electrical and Computer Engineering at Georgia Tech in 2018 and 2021, respectively, under the supervision of Dr. Ghassan AlRegib. My research interests include machine learning, computer vision, and multimodal foundation models.

Please check my CV and Google Scholar profile for more information.

News:

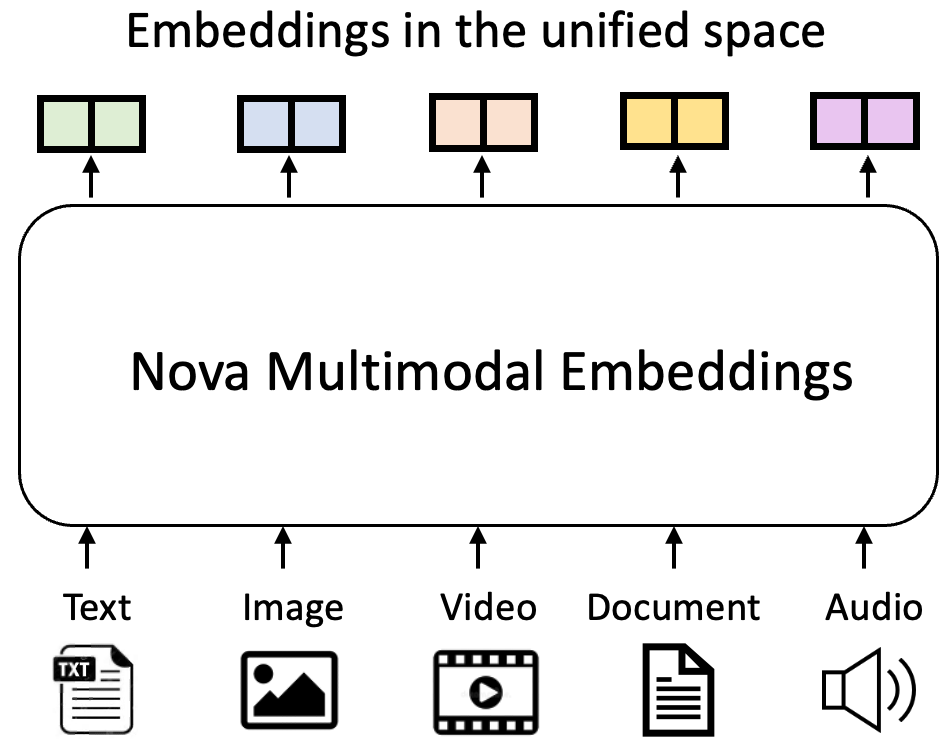

- Oct. 23, 2025: The Amazon Nova Multimodal Embeddings model is launched.

- May 10, 2025: I am recognized as a CVPR 2025 Outstanding Reviewer.

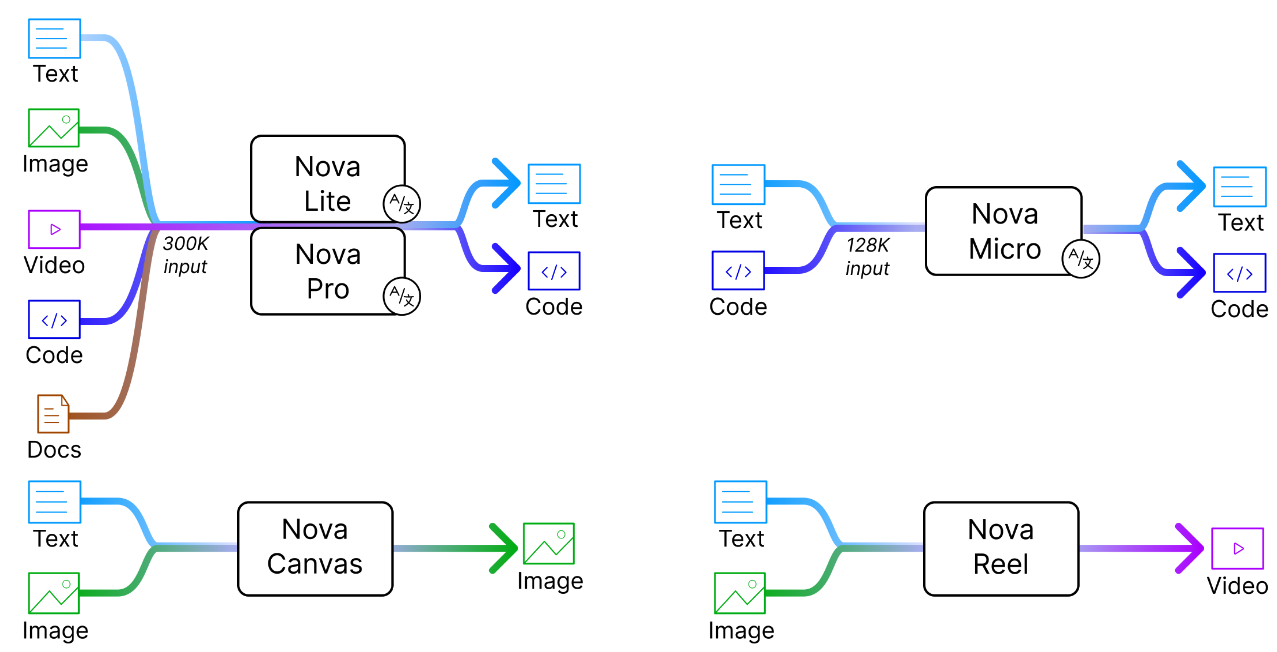

- Dec. 3, 2024: The Amazon Nova Multimodal Foundation models are launched.

- Nov. 29, 2023: The Amazon Titan Multimodal Embeddings model is launched.

- Oct. 9, 2023: I am promoted to Senior Applied Scientist at Amazon.

- May 16, 2023: I am recognized as a CVPR 2023 Outstanding Reviewer.

- Jan. 20, 2023: One paper is accepted for publication at ICLR 2023.

- May 30, 2022: I receive a CSIP Outstanding Research Award in recognition of my Ph.D. research at Georgia Tech.

- Jan. 30, 2022: One paper is accepted for publication at IEEE Transactions on Image Processing.

- Jan. 11, 2021: I am joining Amazon Web Services (AWS) AI Labs as a full-time Applied Scientist.

- Oct. 28, 2020: Our paper received the Top Viewed Special Session Paper Award at ICIP 2020.

- Jul. 3, 2020: Our paper is accepted for publication at ECCV 2020.

- May 15, 2020: Two papers are accepted for publication at ICIP 2020.

- Sept. 24, 2019: Our paper won the Best Paper Award (top 0.1%) at the IEEE International Conference on Image Processing (ICIP) 2019.

- Apr. 30, 2019: Our paper is accepted for publication at ICIP 2019.

- May 4, 2018: Our paper is accepted for publication at ICIP 2018.

- Nov. 14, 2017: Our paper is accepted for publication at the 31st Conference on Neural Information Processing Systems (NIPS), Machine Learning for Intelligent Transportation Systems Workshop, 2017.

- Mar. 29, 2017: Our paper is selected as a Finalist of the World’s FIRST 10K Best Paper Award (top 3%) at the IEEE International Conference on Multimedia and Expo (ICME) 2017.

Experience

Amazon AGI

Senior Applied Scientist

October 2023 - Present

- Contributed to the Amazon Nova Multimodal Foundation models, which achieve frontier intelligence across diverse vision and language tasks.

- Led the launch of the Amazon Nova Multimodal Embeddings model, a state-of-the-art multimodal embedding model and the first embedding model to support five input modalities (text, documents, images, video, and audio) for agentic RAG and semantic search applications.

AWS AI Labs

Applied Scientist

January 2021 - October 2023

- Developed the Amazon Titan Multimodal Embeddings model, which enables accurate and contextually relevant vision-language search.

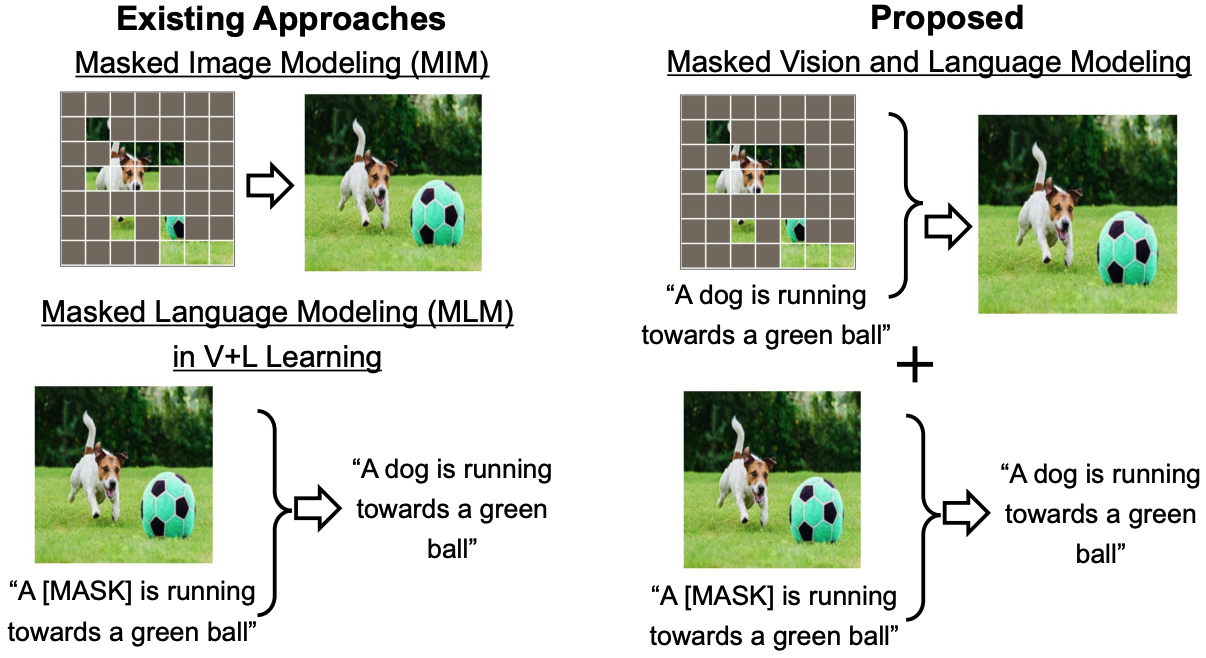

- Conducted research on vision and language representation learning and proposed Masked Vision and Language Modeling (MaskVLM), which was accepted for publication at ICLR 2023.

AWS AI Labs

Applied Scientist Intern

May 2020 - August 2020

- Developed regularization techniques for vision and language models that improved performance on visual question answering, caption-based image retrieval, and referring expression tasks.

Panasonic Automotive

Deep Learning Research Intern

May 2018 - July 2018

- Developed deep learning-based algorithms for detecting driver misbehavior in autonomous vehicles.

- Focused on driver pose estimation and hand detection algorithms using TensorFlow and C++.

Georgia Tech

Graduate Research/Teaching Assistant

January 2016 - December 2020

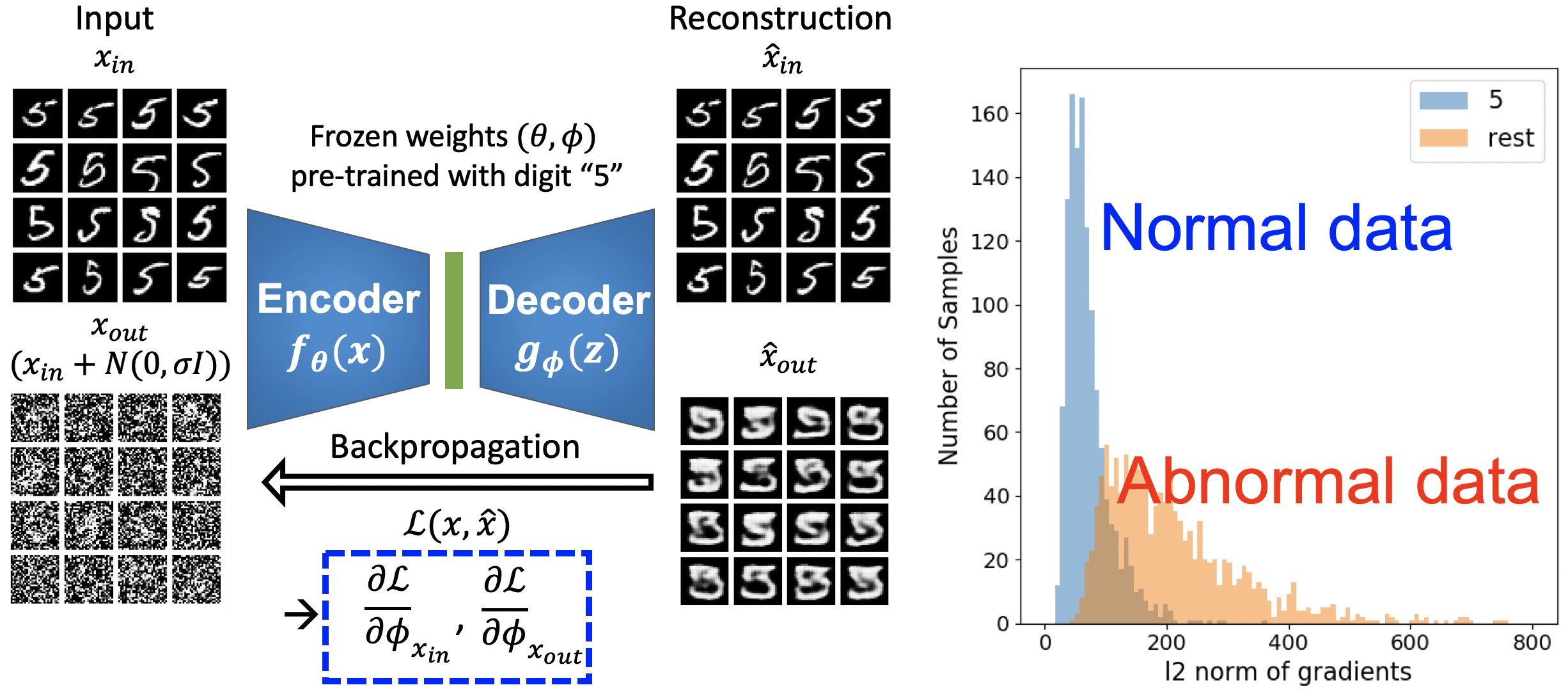

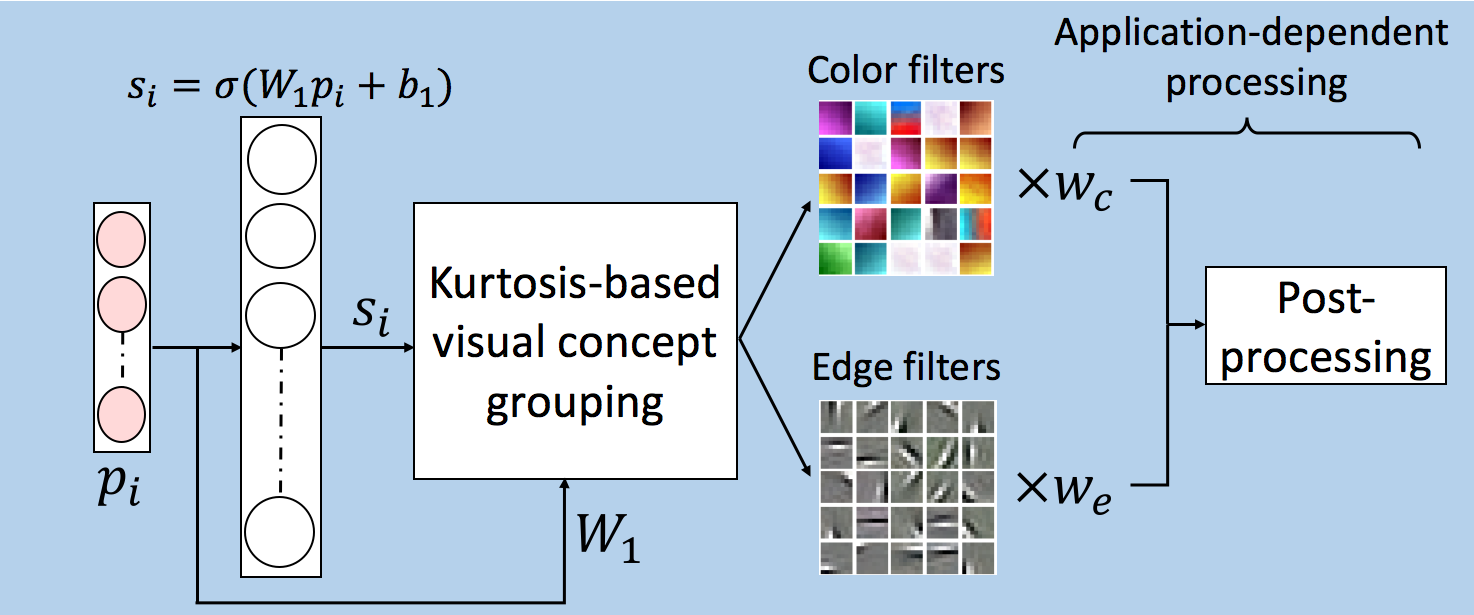

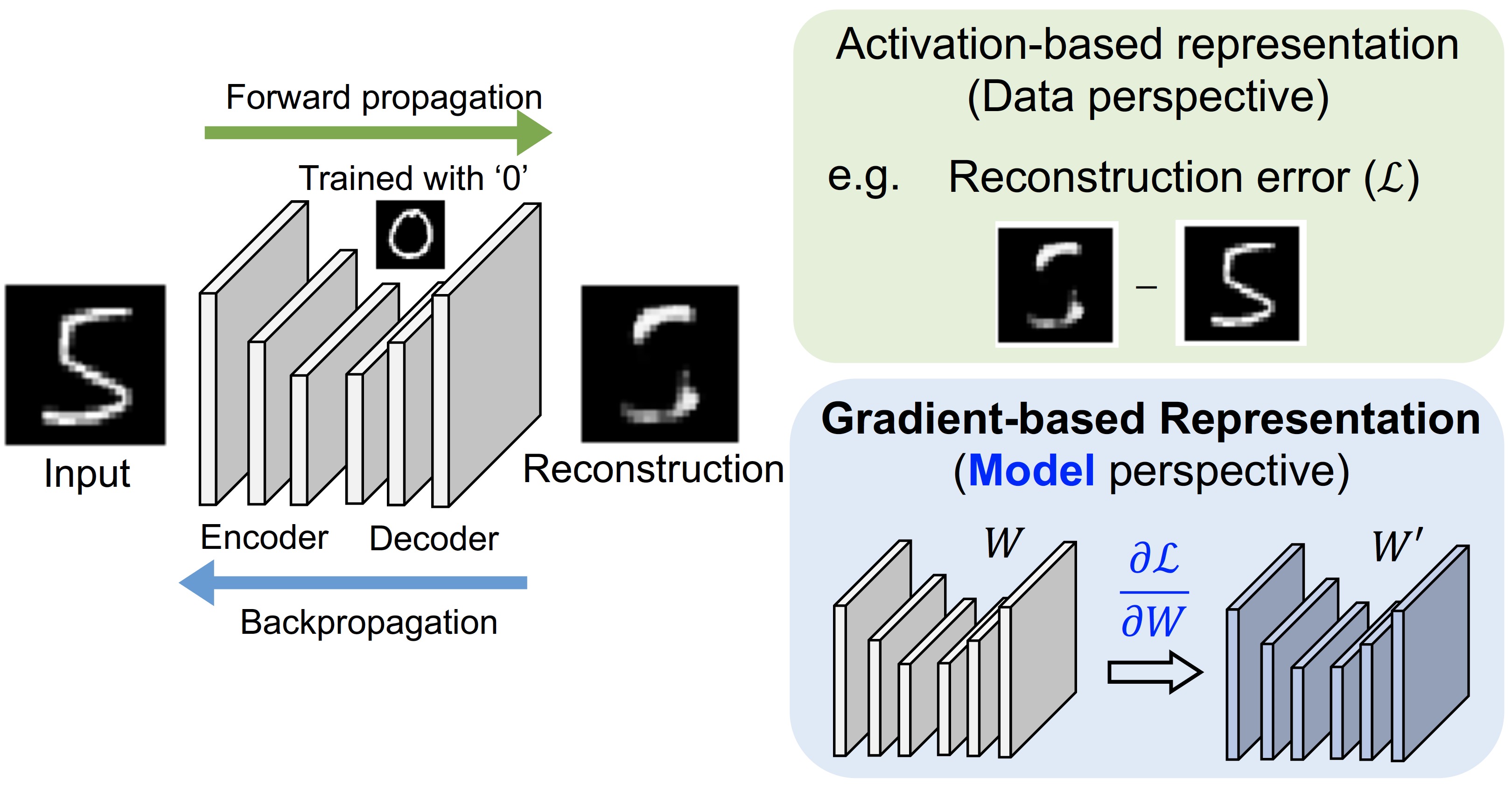

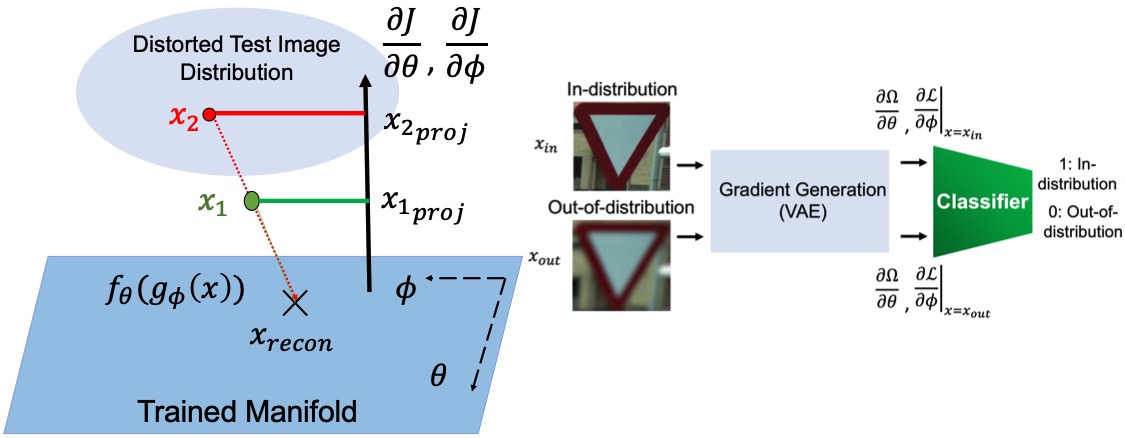

- Developed an anomaly detection algorithm using gradient-based representations which characterize knowledge that the model has not learned.

- Conducted research on accident event detection algorithms that detect abnormal situations in driving scenarios to support safe autonomous driving.

- Introduced a large-scale traffic sign recognition dataset for robust visual understanding under challenging conditions.

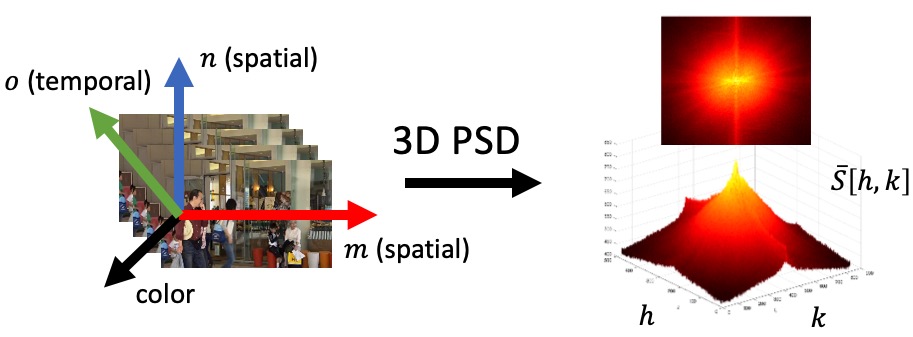

- Developed a perceptual video quality assessment (VQA) metric that achieved state-of-the-art performance in estimating the impact of visual distortions on human perception.

Publications

Amazon Artificial General Intelligence, “Amazon Nova Multimodal Embeddings: Technical Report and Model Card,” Tech report, 2025.

Amazon Artificial General Intelligence, “The Amazon Nova Family of Models: Technical Report and Model Card,” Tech report, 2024.

G. Kwon, Z. Cai, A. Ravichandran, E. Bas, R. Bhotika, and S. Soatto, “Masked Vision and Language Modeling for Multi-modal Representation Learning,” International Conference on Learning Representations (ICLR), 2023.

G. Kwon, M. Prabhushankar, D. Temel, and G. AlRegib, “Backpropagated Gradient Representations for Anomaly Detection,” European Conference on Computer Vision (ECCV), 2020.

G. Kwon*, M. Prabhushankar*, D. Temel, and G. AlRegib, “Distorted Representation Space Characterization Through Backpropagated Gradients,” 2019 IEEE International Conference on Image Processing (ICIP), Taipei, Taiwan, 2019, pp. 2651-2655. (*: equal contribution; Best Paper Award (top 0.1%))

M. A. Aabed, G. Kwon, and G. AlRegib, “Power of Tempospatially Unified Spectral Density for Perceptual Video Quality Assessment,” 2017 IEEE International Conference on Multimedia and Expo (ICME), Hong Kong, 2017, pp. 1476-1481. (Finalist of the World’s FIRST 10K Best Paper Award (top 3%))